Stock market trend prediction using deep neural network via chart analysis: a practical method or a myth?

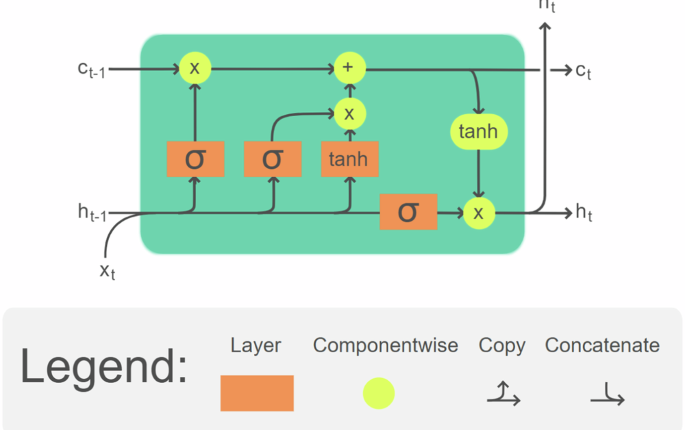

Given the limitations observed in LSTM methods, we propose a structure that addresses these shortcomings. To mitigate the forgetting phenomenon, a transformer-based model (Vaswani et al. 2017) is used. The transformer computes an attention matrix that incorporates all previous data in a sequence and determines which values are correlated. It therefore does not suffer from forgetting; however, all data segments must be provided as input, which confines the model to a specific attention window (in this study, the historical days of the stock). The cornerstone and key advantage of the transformer, despite its larger memory size ($n^2$) compared to LSTM ($n \log n$), is its ability for parallel computation. This capability has…
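To make the attention matrix described above concrete, the following is a minimal sketch of scaled dot-product self-attention over a window of historical days. It is not the model used in this study: it assumes queries, keys, and values are all the raw input (no learned projections, no masking, a single head), and the window length `n_days` and feature count `n_features` are hypothetical values chosen for illustration. It only shows why every day in the window is compared with every other day, giving an n-by-n weight matrix.

```python
import math
import random


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x.

    Assumption: no learned projections, masking, or multiple heads.
    x: list of n feature vectors, one per historical day in the window.
    Returns (weights, output); weights is the n-by-n attention matrix.
    """
    d = len(x[0])
    scale = math.sqrt(d)
    # Every day is scored against every day in the window: n * n entries.
    scores = [[sum(a * b for a, b in zip(q, k)) / scale for k in x] for q in x]
    # Each row is normalized independently, so rows can be computed in parallel.
    weights = [softmax(row) for row in scores]
    # Each output vector is a weighted average of all days in the window.
    output = [
        [sum(w * v[j] for w, v in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return weights, output


random.seed(0)
n_days, n_features = 6, 4  # hypothetical window length and feature count
window = [[random.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_days)]
weights, output = self_attention(window)
```

Because no row of `weights` depends on any other row, all days can be processed at once, unlike the step-by-step recurrence of an LSTM. The cost is that the weight matrix grows with the square of the window length.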